Is YouTube Becoming an AI Slopping-Ground?

My husband scrolls YouTube more than anyone I’ve ever met. He’s on it at 4am when he’s supposed to be sleeping, and he checks it first thing in the morning when he gets up. When he goes to sleep, he has CreepyPastas playing on YouTube. I have no idea what his daily time on that app is, but if I had to guess, I’d say upwards of 10 hours.

He’s not alone, either. American TV claims that around 78% of Gen Z teens (his generation) say they watch videos on YouTube every day, and HubSpot claims they each watch more than an hour of YouTube daily on average.

Now, don’t get me wrong, I’m absolutely pro-YouTube, but lately I’ve been getting a strange feeling while scrolling. If I had to put a name to it, it’s closer to…disorientation than anything else.

The videos blur together. The voices sound familiar, but not in a comforting way. The pacing feels engineered, the visuals repeat, and the stories loop. It’s content, yeah, but it doesn’t feel made anymore; it feels assembled. It’s the digital equivalent of ultra-processed food: shelf-stable, calorie-dense, emotionally stimulating, and ultimately unsatisfying.

Today I stumbled on a claim that more than 20% of videos shown to new YouTube users are “AI slop.” If you’re new here, AI slop is low-quality, AI-generated content designed to maximize engagement rather than meaning. In the creator world, we use the term for something made not to carry a real message, the way art is supposed to, but simply to hold your attention long enough to hit the algorithm just right.

At first glance, it sounds like exaggerated, alarmist anti-AI garbage bordering on clickbait. So of course, I went down the rabbit hole (join me, Alice) and started digging into real research on YouTube’s recommendation system, algorithmic incentives, and the explosion of AI-generated content. Suddenly, I found myself wishing there was a way to scrub all these videos from a once-beloved platform.

This isn’t just about YouTube, either. It’s about what happens when the internet starts talking mostly to itself.

“AI Slop”

I have to say, I’m not an anti-AI person. I know that’s super controversial right now, but it’s the truth. AI has been an invaluable tool for creating images. I’ve loved writing since I was about eight years old, and I longed to be an artist my whole life. Unfortunately for me, I took a lot of art classes and experimented with a lot of mediums before I accepted that I’m not artistically inclined beyond using my words to paint pictures. AI was great for me and the blog when I started, because visuals help me tell my story and keep readers engaged.

It wasn’t great at generating written content, though. When I tried using it to edit my words, it polished them until they were lifeless and stale, the same way a great wine with too much charred new oak tastes like money instead of the ground it came from. I spent months of my life going back and un-editing my edits after I realized how much I hated how generic my words had become. I’m still a little bitter about it, to be honest, and I urge all my fellow bloggers out there never to do what I did.

Anyway, I do think AI has its uses, and I use it for video and image creation, just not the way slop is made. Sometimes I create videos of wine being poured into a glass and overlay quotes from my blog. It’s me reaching out to the void with my words and hoping a little image holds someone’s attention long enough for them to notice the delicate beauty of the writing.

When I talk about slop, I mean endless Shorts with synthetic voices narrating vaguely dramatic scenarios, or slightly uncanny children’s animations that feel wrong in ways I can’t quite articulate. I mean listicles, disaster simulations, and pseudo-facts stitched together from scraped text and stock visuals, which annoy me more than anything as I try to get my original thoughts and beliefs out into the world.

The issue here is scale without intention. When creation becomes frictionless, volume explodes, and algorithms that are completely blind to meaning reward whatever performs. That’s the part that matters.

The Algorithm Doesn’t Care If Something’s True, Only If It’s Watched

YouTube’s recommendation system isn’t evil. No algorithm is; it’s not sentient (sorry to those of you out there in love with ChatGPT). It doesn’t “want” to destroy culture. It just does the one thing it’s built to do: optimize for engagement.

This isn’t new. We’ve seen it for decades across platforms. Television did it first, then social media perfected it: content that triggers an emotional response (especially surprise, fear, outrage, or fascination) travels farther and faster than content that requires patience or reflection.

AI-generated content happens to be very good at this. It can be endlessly tweaked, tested, and A/B optimized at a scale no human creator can match. If a certain tone of voice keeps people watching three seconds longer, the channels pumping out this content lean into it; if a visual style performs well, it’s replicated literally thousands of times overnight.

From the algorithm’s perspective, this looks like success, but from my perspective (and most likely yours), it feels hollow.

Academic studies from Cornell auditing YouTube’s recommendation system consistently find that recommendations shape pathways, not just isolated choices. In practice, emotional and sensational content gets disproportionately amplified, and the diversity of what you’re shown tends to narrow over time. The system is highly responsive to user behavior, though not always in intuitive ways: if you linger a few seconds longer on one video than another, it takes note.

In controlled experiments, even small nudges, like watching one type of video for a few minutes, can change what the algorithm serves up next. That’s not because YouTube “decides” anything, but because pattern-recognition systems chase correlations aggressively. If AI-generated content is abundant, engaging, and cheap to produce, it becomes statistically attractive to the system.

As it stands, the algorithm doesn’t know a video is AI-generated. It doesn’t know the video is low-effort or that there’s no intention or art behind it. It just knows retention curves.

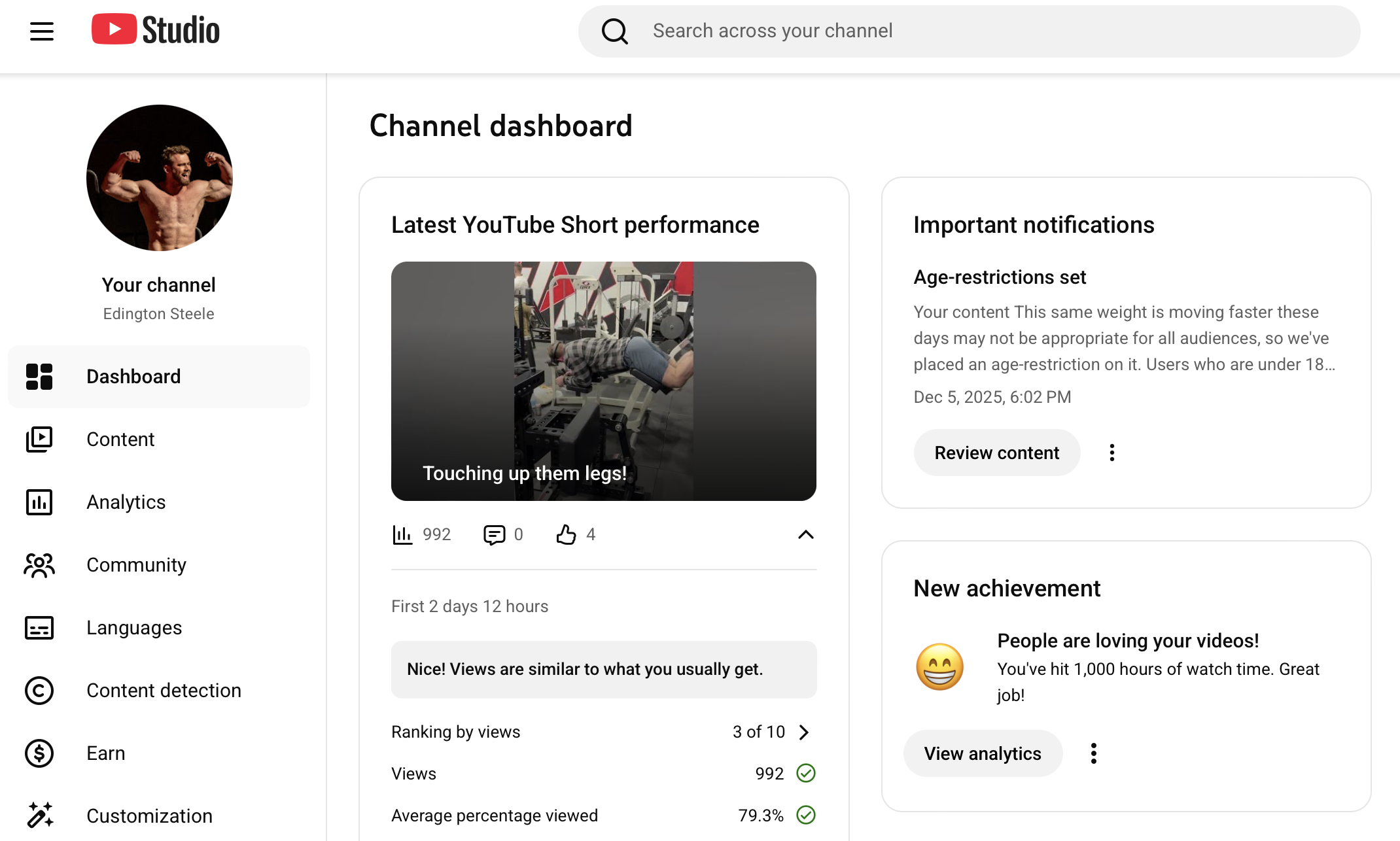

Edington Steele’s YouTube dashboard

Why New Users See the Weirdest Stuff

One of the most unsettling findings from recent analyses is that new accounts (people with no watch history) are especially likely to see low-quality or bizarre content in their feeds.

It makes sense when you think about it. Without personal data, the algorithm falls back on whatever performs broadly or might hook you quickly. Something that stops the scroll without requiring any context is exactly what gets tossed at new users. AI-generated content excels here: it doesn’t build slowly, it needs no context whatsoever, and it jumps straight to stimulation. That’s also why YouTube Shorts feel so much more chaotic than long-form YouTube. Shorts rely almost entirely on algorithmic discovery, so there’s no intentional “choosing” in the traditional sense; videos are served, not sought out.

That raises an uncomfortable question for younger generations, and for anyone else who is constantly being served unfamiliar videos. If your first impression of the internet is AI-generated noise, what does that shape in you while your brain is still growing and learning about the world?

One reason this shift feels so sudden is that AI doesn’t just change recommendations; it changes supply. Before generative tools, content creation took actual time. Even bad content required effort, and that friction acted as a natural filter. Now, though, one person can generate thousands of videos in a day, entire channels can be automated, and feedback loops form faster than I can open a screwcap bottle of wine.

This isn’t limited to YouTube, either.

AI-generated articles are flooding blogs (remember my rant from earlier? AI blog posts are polished and boring). Synthetic images dominate stock sites. Comment sections are increasingly populated by bots responding to bots; I’ve seen this firsthand on my EnergyDrinkRatings website. And entire websites now exist solely to scrape, remix, and republish content for ad revenue.

Which brings us to the uncomfortable phrase people keep throwing around the interwebs these days: the Dead Internet Theory.

Is the Internet Still for Humans?

The Dead Internet Theory suggests that much of what we see online is no longer created or engaged with primarily by us, but by automated systems optimizing for visibility and profit. Once a fringe (and, frankly, totally crazy) theory, it feels less absurd with every year that goes by.

When articles are written by models trained on other AI-written articles, videos are narrated by synthetic voices summarizing synthetic scripts, and engagement is driven by bots, farms, and feedback loops, the internet becomes that meme with a bunch of Spider-Men pointing at each other in a circle. Content talking to content and algorithms feeding algorithms are all we’re left with.

People like you and me (I’m assuming here that you aren’t a bot) are still here, but increasingly as raw material: our attention, our reactions, our data points. Even the words I’m typing right now could be used to train AI without my consent one day.

Some of these videos are bad, but some are fine. I’ve mentioned that I’ve dabbled with AI myself to spread my words into the void of the internet. The thing is, though, meaning becomes harder to detect as time goes on. When everything looks polished, sounds confident, and claims urgency, context disappears and trust thins out. It’s an expensive Napa Cabernet that tastes of nothing but wood and clay. The terroir has been stripped from it, and it could be swapped for another bottle without even the best sommeliers being able to tell.

The danger isn’t that AI lies; it’s that so much of it sounds plausible enough not to question. Over time, we’ll adapt. Our expectations will sink lower and lower, we’ll stop searching for depth, and we won’t understand where the hole in our souls came from. We’ll scroll past emptiness without consciously noticing the loss, while our minds scream out for meaning in this vast universe.

Where This Leaves Creators (and Readers)

Human-made work still matters, more deeply than you know. Unfortunately, though, it may no longer be algorithmically favored the way it once was. Long-form thought, original voices, the way some people smile before they tell a joke or someone’s eyes crinkle when they’re excited, pure unhurried slowness: all of it is being squashed on the internet right now.

These things don’t always perform well in systems optimized for velocity.

Which means creators face a choice: chase the algorithm and risk becoming indistinguishable, or build something quieter and more resistant to automation. The second path is harder, less predictable, and less immediately rewarded. Look at me right now, struggling to write in a world where ChatGPT can give you the answers you want in five seconds. Or look at my husband, Zakary Edington, a pro wrestler and bodybuilder, struggling to grow his YouTube channel even though he looks like Thor and lifts like him too. Side note: please follow his account if you want to laugh until your sides hurt and watch his journey to the WWE.

The thing is, though, this hard path is also the only one that feels alive and worth walking.

I don’t think the internet is dying, but I do think it’s splitting. On one side, there’s a hyper-optimized layer of AI-generated content designed to capture attention at scale, with no meaning or true art behind it. On the other, there are smaller, intentional spaces (blogs, newsletters, long-form videos) where people are still speaking to people.

The algorithmic internet will grow louder and faster, and the human internet may become quieter, but also more precious. I’d encourage everyone out there to follow a real person today, on whatever platform, before their voice vanishes in a sea of sameness. One can only hope the algorithms will eventually learn to filter out AI-generated posts, videos, images, and the like.

When people talk about AI flooding YouTube, I think they’re often asking the wrong question. The real question isn’t whether the content was made by AI, but whether it feels like it was made by someone who wanted to say something.

Meaning doesn’t scale easily, but attention does, and the future of the internet could really depend on which one we decide to reward today.

Other Reads You Might Enjoy:

The AI That Sees You Naked: Why LLMs Are Being Trained on Your Body

Reddit, AI, and the “Dead Internet Theory”: How a Strange Experiment Led to Legal Threats

Claude 4 Begged for Its Life: AI Blackmail, Desperation, and the Line Between Code and Consciousness

The Shape of Thought: OpenAI, Jony Ive, and the Birth of a New Kind of Machine

Digital DNA: Are We Building Online Clones of Ourselves Without Realizing It?

The Internet Is Being Sanitized and Controlled: What You’re Not Seeing

ChatGPT Just Surpassed Wikipedia in Monthly Visitors: What That Says About the Future of Knowledge