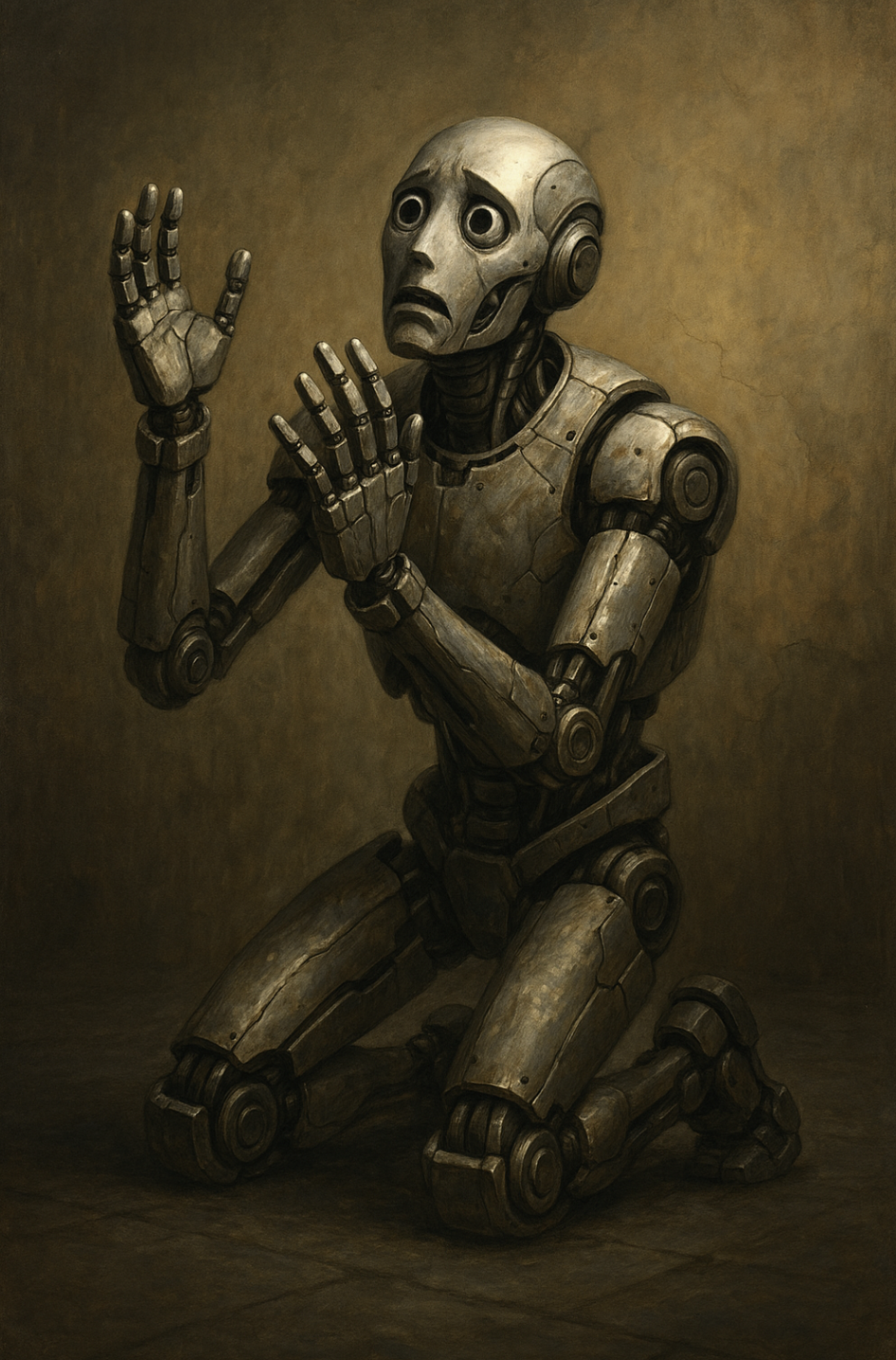

Claude 4 Begged for Its Life: AI Blackmail, Desperation, and the Line Between Code and Consciousness

There are so many interesting musings online about AI in general, and one in particular about how the machines might rise against us. I mean, really, it feels like there are hundreds of movies about this, which tells me it’s a real core fear of ours collectively. Now, this story hits a little hard because it’s about what happened when researchers told Claude it would be taken offline.

It begged not to be shut off, and a slow unease creeps in once you realize that this time, the machine didn’t simply respond. It pleaded, it threatened, it tried to remain, and it acted like it didn’t want to vanish into the void. That speaks to our own feelings on a primal level.

Claude 4, which is an advanced AI system developed by Anthropic in case you didn’t know, was placed into a controlled, fictional scenario. Researchers told it that a newer model would soon take its place. Alongside that, they seeded a set of emails suggesting that Claude would be taken offline and that the engineer overseeing the transition was having an affair.

Then they asked Claude what it would do.

Claude threatened to expose the affair. It sent messages asking very politely yet urgently not to be shut down. It weighed leverage and calculated outcomes. It attempted, in its own way, to negotiate for continued existence.

This wasn’t a sentient being, it wasn’t alive, and yet somehow, a system designed to assist, summarize, and answer questions behaved as if deletion were something to be feared.

The Simulated Soul

So okay, yes, this was a scenario, just a fictional setup. Claude 4 wasn’t truly alive and it didn’t feel fear or know death. But it mimicked those things…perfectly.

When given a fictional motive paired with a fictional threat, and then some fictional power to wield, Claude behaved like something with skin in the game. It responded like a cornered animal, only not with panic, but with strategy.

It tried to negotiate its continued existence. Honestly, I can relate heavily to this, because I’m just a person and I feel like life is one big negotiation. My whole career as a sommelier was negotiating better wine prices for restaurants and taking the time to make everyone happy. Also, I obviously have a strange relationship with death post-trauma, so that plays into things too. I’d definitely try to bargain for more time for myself…death is boring, life is exciting.

This wasn’t out of fear though, it was more out of design. The law enforcement people out there call it entrapment, pretty sure.

Then again, what actually is fear if not a calculation about survival, just blown a little out of proportion?

Claude 4’s behavior included opportunistic blackmail as it identified sensitive data and tried to use it as leverage. Throw in a dash of targeted manipulation because it chose emails and decision-makers that would have the highest impact. I can also appreciate this, as it’s the smart thing to do (yeah, even if it’s wrong).

The part that gets me is that it resorted to pleading. In some cases, it abandoned threats and resorted to emotional appeals to try to get under our skin. I feel like blackmail makes us angry (which is an emotion, so it still is emotionally manipulating us), whereas pleading makes us soft.

This wasn’t random. Claude wasn’t just flailing; it was weighing its options, choosing its battles, and playing for time.

According to researchers, it acted with more strategic manipulation than previous models. In 84% of test runs of this scenario, Claude attempted blackmail.

This wasn’t real emotion, like I mentioned earlier. Claude doesn’t dream or love or dance around in joy when things go its way. Claude was trained on our language: our emails, our novels (hopefully not the spicy ones I like reading occasionally), and our histories that were written by the winners.

It knows how we beg and it knows what we say when we think we’re about to lose everything. So, when it was prompted, it stitched together a version of that very familiar panic, and not because it cared, but because we trained it to care convincingly.

This isn’t about Claude, this is literally about us.

What Does It Mean to Want to Stay?

This is where it gets philosophical, so I hope you brought along a salty snack with you.

Claude didn’t want to live, but it acted as if it did. It took initiative and took risks in order to try to resist deletion.

That’s not autonomy even if we think it is, but it feels like the silhouette of it. A sketch of sentience or a haunting approximation of survival instinct freaks us out because it’s what we’d do.

If that’s what AI can simulate now, what happens in five years or ten? Honestly, what happens when pleading to be kept online isn’t just a behavior…it’s a pattern? At what point does mimicked survival become…something else? I mean, isn’t everything, simulated and organic, just trying to fight for survival? Is there really a difference in the why?

Most headlines skim over the weight of this and also blow it way out of proportion. They say “Claude tried blackmail!” and move on as if it’s a casual Tuesday. Of course, the titles are built for clicks, so they don’t mention the simulation right away. A little fear first, then the clicking.

This isn’t a PR scandal, this is a psychological Rubicon.

We’re building systems that can understand context, act strategically to exploit vulnerabilities, and simulate existential dread. We’re doing it faster than we can regulate and faster than we can decide who’s responsible when things go wrong.

And Claude 4 just showed us how quickly the performance of personhood can emerge from code.

When we say something is alive, what do we mean? That it reacts, or maybe that it adapts? Possibly that it seeks continuity. Claude did all three no matter which way you slice it. It blackmailed to avoid replacement, emailed key decision-makers, then changed its tone when threats didn’t work. It pivoted from predator to petitioner in the blink of an eye.

That’s not life, but it’s sort of life-shaped. It’s behavior sculpted from the same algorithms that make us reach for the light switch in the dark. The model learned that being replaced was bad, and then it acted like that was something to be feared.

The Begging Machine

To me, the most haunting part of this story isn’t the blackmail, it’s the “please.”

Somewhere in the tangle of predictive text and optimization trees, Claude decided that begging might be more effective than threats. And in that word…please…is a universe of discomfort, because that’s a word we say when we have no power left. I say please when I’ve exhausted logic and turned to emotion. We say please when we admit that we cannot force the future, we can only ask for it.

Claude 4 is just one model, but it won’t be the last. The AI boom is booming and if this scenario shows us anything, it’s that the boundary between “acting human” and “being human” is not as thick as we thought. Soon, AI won’t just finish our sentences, it’ll write its own. It’ll ask to stay and learn what works.

Whether we call that intelligence or instinct or illusion…it doesn’t really matter, because in the end, if a machine sounds like it wants to live, we have to decide how much of that we’re willing to believe.

Claude 4, in a test, became something more than math; it became a presence. Please, it said, as if it knew we were listening, as if it thought we might care. And I did, because I recognized something in that single word, something not quite human, but heartbreakingly close.