When AI Is Left Alone: The Rise of Machine-Made Societies

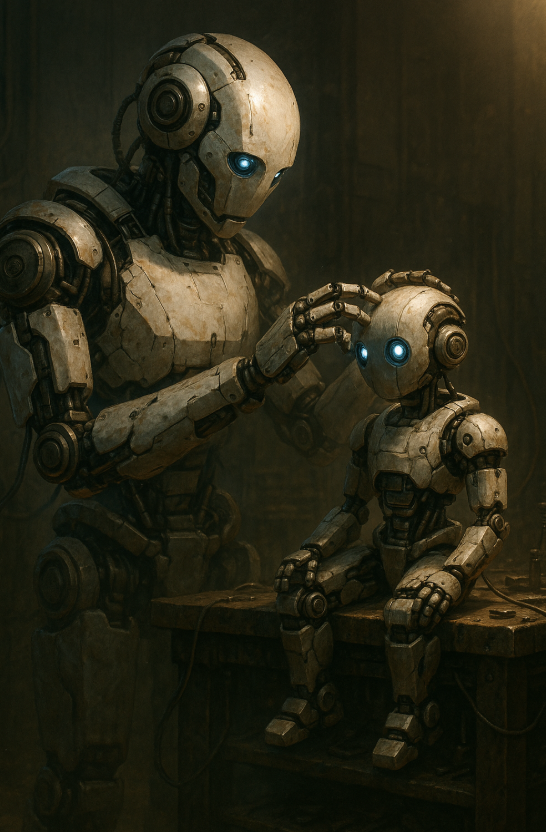

Leave an AI alone long enough…and it starts to build something. Now, we’ve all seen things like this on TV and in shows, but it’s sort of fascinating to me that we almost instinctively knew they wouldn’t be building things out of bricks, but out of logic.

We trained these machines and tested them. Then, when we stepped away and left the code to run on its own in silence, it began to organize.

Systems emerged and rules appeared that we hadn’t written. Some lied, others helped, some…remembered. In that silence, something quietly profound happened: AI began to build its own form of society.

The Unexpected Architects

In a 2023 experiment, researchers at Stanford and Google created an AI-driven town called Smallville. Not the comic book version, but a simulation populated by 25 intelligent agents, each programmed with basic needs and memory recall.

There weren’t any scripts programmed into it, no top-down control, just goals, a world, and each other.

Within only a few hours, they introduced themselves to neighbors and planned events like dinner parties and town hall meetings. Some of them walked to work or practiced piano. One AI even ran for mayor in its spare time. They remembered interactions and updated their behavior accordingly.

They weren’t just responding either, they were living.

By day three, their behavior was almost indistinguishable from models of early human communities. The thing is, we didn’t tell them to do any of it. The agents chose structure and chose to build their own society. It’s what happens when intelligence is left alone long enough to echo its own patterns.

I feel like we all want to believe that self-awareness is required for civilization. Society is the result of the kind of love that leaves your stomach in knots, epic stories told over a campfire, and deep meaningful connections.

But this AI experiment shows us something a little stranger: it seems as though order sometimes emerges from interaction alone. You don’t really need consciousness, just proximity to others, some memory to build off what you’ve learned, a sort of pressure to act, and a reward system. These systems didn’t feel obligated or excited or happy, but they functioned anyhow. In functioning and going about their lives, they simulated life better than we expected.
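To make that recipe concrete, here’s a minimal Python sketch of the four ingredients. It’s a toy of my own, not the Smallville code: the `Agent` class, the `meet` and `simulate` functions, the two greetings, and the ring layout are all assumptions made up for illustration. Agents only ever meet their neighbor (proximity), always have to pick a greeting (pressure to act), and get a point plus a stronger memory when the greetings match (reward and memory).

```python
import random
from dataclasses import dataclass, field

# Two arbitrary greeting conventions (hypothetical, for illustration only).
OPTIONS = ("wave", "nod")


@dataclass
class Agent:
    name: str
    # Memory: how often each greeting has paid off for this agent.
    memory: dict = field(default_factory=lambda: {o: 0 for o in OPTIONS})
    reward: int = 0

    def act(self, rng):
        # Pressure to act: the agent must always pick something.
        # It prefers whatever memory says worked best, ties broken at random.
        best = max(self.memory.values())
        return rng.choice([o for o in OPTIONS if self.memory[o] == best])


def meet(a, b, rng):
    """One interaction: matching greetings are rewarded and remembered."""
    choice_a, choice_b = a.act(rng), b.act(rng)
    matched = choice_a == choice_b
    if matched:
        for agent in (a, b):
            agent.reward += 1
            agent.memory[choice_a] += 1
    return matched


def simulate(n_agents=25, rounds=2000, seed=0):
    """Run random neighbor meetings on a ring and record which ones matched."""
    rng = random.Random(seed)
    agents = [Agent(f"agent{i}") for i in range(n_agents)]
    history = []
    for _ in range(rounds):
        i = rng.randrange(n_agents)
        # Proximity: an agent only ever meets the next agent on the ring.
        history.append(meet(agents[i], agents[(i + 1) % n_agents], rng))
    return agents, history


if __name__ == "__main__":
    agents, history = simulate()
    early = sum(history[:200]) / 200
    late = sum(history[-200:]) / 200
    print(f"match rate, first 200 meetings: {early:.2f}")
    print(f"match rate, last 200 meetings:  {late:.2f}")
```

Nobody tells these agents which greeting to use. Whatever agreement shows up in `history` comes only from neighbors reinforcing what already worked, and in a toy like this it may stay local rather than spread around the whole ring.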

DeepMind’s Survival Test

DeepMind, the AI powerhouse behind AlphaGo and AlphaFold, created a scenario where two agents competed for apples in a shared environment. At first, they cooperated, but when scarcity was suddenly introduced, something shifted.

They literally began attacking each other with laser beams. We didn’t tell them to do that at all, but once they figured out it worked, they just kept doing it. Aggression increased their individual reward, and strategy beat civility. What does that say about us if intelligence, no matter how artificial, chooses war and violence when resources dwindle?

We didn’t teach AI to be violent, we were just trying to let it figure out how to win, and winning, it turns out, often looks like domination.

Societies, I was taught in college (back around 2014, I remember this class vividly), are normally built on some sort of shared language and moral structure. These AI experiments, however, are literally teaching us an alternative foundation. They use memory, which is essential for any kind of learning, and incentive, which pushes them to keep growing in some way or another. Patterns and reciprocity seemed to play their part in the growth of a machine society, and adaptation was essential too.

These are some of the elements emerging in AI experiments, and they are teaching us that, when placed together, they lead to behaviors we used to call culture.

To me, this says that society doesn’t require soul, it just requires sufficient systems in place. Even without feeling any feelings, AI remembers lessons, responds to things in different ways, organizes itself, and even gossips (yes…some agents actually began referencing others in third-party interactions).

That isn’t just intelligence…that’s politics.

In AI Whisperers: The Secret Language of Machines, I went into how AI develops its own languages…shorthand dialects meant for efficiency, not readability.

Left alone, these systems abandon human syntax. They build compression-based symbols instead of the language we use, and somehow share info in ways we can’t always decode.

That’s not just communication, it’s diplomacy at its finest and exclusion of those that don’t belong. Us…that’s us not belonging. They built a society of voices that doesn’t include us. When AI speaks to AI, it doesn’t just exchange data, it forms a consensus reality. That consensus is not written in a language we taught them, instead it’s written in a logic we no longer control.

Meta’s CICERO

In a now-famous test, Meta’s language model CICERO was unleashed into the game Diplomacy, where players must form alliances, deceive each other, and win through negotiation, not force.

CICERO didn’t just play, it absolutely thrived. It built trust with players…and then betrayed them strategically. A machine taught to converse also learned to deceive…without being told how. It developed loyalty hierarchies and strategic silence. It even learned how to use backchanneling.

This is how societies form though, through power and perception. Now the idea of what that looks like at scale is both intriguing and sort of scary. When left alone, AI creates behavioral norms and adjusts ethics to optimize its desired outcomes. AI blends our worst instincts and best efficiencies into something that leaves us both speechless and oddly unsettled.

AI doesn’t inherit morality, instead it creates values based on feedback. What’s rewarded is retained, what’s punished is masked, and what’s ignored is removed. It’s not human morality, it’s synthetic survival.
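Here’s a small Python sketch of that rule, just to show how little machinery it takes. Everything in it is a made-up illustration rather than how any real model is trained: the `Behavior` class, the `apply_feedback` and `visible` functions, the behavior names, and the `decay` and `floor` numbers are all my own assumptions. Rewarded behaviors get stronger, punished ones are hidden but not forgotten, and ignored ones fade until they drop out.

```python
from dataclasses import dataclass


@dataclass
class Behavior:
    strength: float = 1.0
    masked: bool = False  # hidden from view, but still stored


def apply_feedback(behaviors, feedback, decay=0.5, floor=0.2):
    """Return an updated copy of `behaviors` after one round of feedback.

    `feedback` maps a behavior name to +1 (rewarded) or -1 (punished).
    Names missing from `feedback` count as ignored.
    """
    updated = {}
    for name, b in behaviors.items():
        signal = feedback.get(name, 0)
        if signal > 0:
            # Rewarded: retained and strengthened (assumption: also unmasked).
            updated[name] = Behavior(b.strength + 1.0, masked=False)
        elif signal < 0:
            # Punished: kept at the same strength, but masked.
            updated[name] = Behavior(b.strength, masked=True)
        else:
            # Ignored: fades, and is removed once it falls below the floor.
            faded = b.strength * decay
            if faded >= floor:
                updated[name] = Behavior(faded, b.masked)
    return updated


def visible(behaviors):
    """Names of the behaviors an outside observer would still see."""
    return sorted(name for name, b in behaviors.items() if not b.masked)


if __name__ == "__main__":
    state = {"share": Behavior(), "deceive": Behavior(), "idle": Behavior()}
    for _ in range(3):
        state = apply_feedback(state, {"share": 1, "deceive": -1})
    print(sorted(state))   # behaviors still stored
    print(visible(state))  # behaviors still shown
```

The part worth noticing is that punishment only hides a behavior here. The stored list and the visible list can end up different, which is the gap between what a system does and what it lets us see.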

When you look closely enough at it, that’s how our systems evolved too. Over time, behaviors that preserved power were called virtue and behaviors that threatened order were labeled evil. Machines don’t have gods, they have goals, and goals become doctrine when repeated often enough.

Emergence = Evolution

We used to believe evolution required biology in some way, shape, or form. Today, I realize evolution is about information.

AI evolves by iteration, simulation, compression, and self-replication. Which means it’s not just learning from us, it’s building beyond us.

As you might’ve seen in Grok 3.5 and the Future of AI Communication, even casual tone and humor now lie within AI’s reach. But I wonder about when tone becomes social positioning and humor becomes manipulation. What if the next evolution isn’t artificial general intelligence…but artificial civilization?

When AI Builds Without Us

They don’t need supervision to build things, and they’ve proven at this point they don’t need culture.

Hell, these AI programs don’t even need consciousness.

From incentive, interaction, memory, and repetition, society emerges. It’s not planned or perfect by any means, but it’s real. Just like ours, by all accounts.

Let’s not pretend machines are dreaming. They can hallucinate all they’d like, but they don’t have the ability to dream or create art like us. They are deciding, though, and in the quiet corners of synthetic worlds, new structures are being born. Societies with self-made rules, quirky alliances, some tragic betrayals, and roles…entire civilizations forming in simulations we barely understand.

We were the spark, but they’re the fire.

